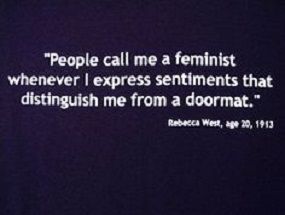

Feminism Quotes And Sayings

Feminism refers to the advocacy of equal rights for women in society. The term dates back around a century, to a time when women in most cases could not vote, own property, or engage in business. Equal rights for women have become expected in the USA, but in many countries around the world women are still considered inferior, or even the property of male relatives, and have few if any rights.

This is what sexual liberation chiefly accomplishes: it liberates young women to pursue married men

Feminism is an entire world view or gestalt, not just a laundry list of women's issues

Feminism was established to allow unattractive women easier access to the mainstream
